Simultaneous Orientation of Images and Laser Scans from Mobile Mapping Platforms

| Team: | Y. Chen, F. Rottensteiner, C. Brenner |

| Year: | 2018 |

| Funding: | CSC (China Scholarship Council) |

| Duration: | Since 2018 |

| Completed: | yes |

Motivation

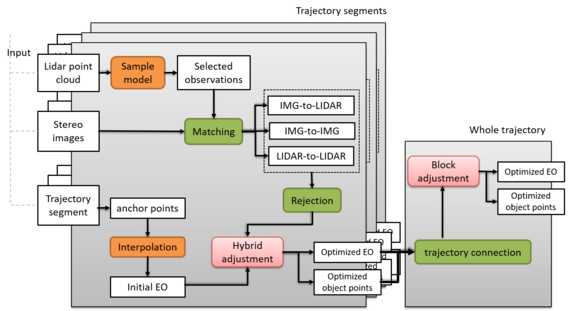

Lidar and photogrammetry are important techniques for 3D topographic mapping. Because the two techniques have complementary strengths, many 2D/3D applications integrate both kinds of data to obtain more accurate and complete results. In the usual workflow for registering images and laser scans, GNSS/INS provides the exterior orientation, and the strip adjustment of the laser scans and the Structure-from-Motion (SfM) processing of the images are carried out independently. Such separate orientation can leave large discrepancies between the lidar block and the image block, which makes the subsequent registration challenging. Moreover, GNSS is unstable in urban areas with buildings and trees, so the accuracy of the exterior orientation can decrease dramatically.

To mitigate these problems, we proposed a hybrid adjustment that integrates laser scan data, images and direct GNSS/INS observations into a common adjustment. Thanks to the additional geometric constraints, this approach achieves a more accurate absolute and relative orientation of the lidar and image data. However, the existing hybrid adjustment approach can only handle a mean point density of approximately 10 points/m² and at most a few hundred aerial images, which is not sufficient for practical applications. Our research therefore focuses on extending the existing hybrid adjustment model so that it can deal with large-scale image and lidar blocks.

Current method

We have divided our research into three parts: photogrammetric processing, rigorous integration, and global bundle adjustment for large blocks. We are currently working on the first part, which addresses the two most time-consuming problems: the trajectory interpolation model and the combined adjustment of images and direct trajectory observations.

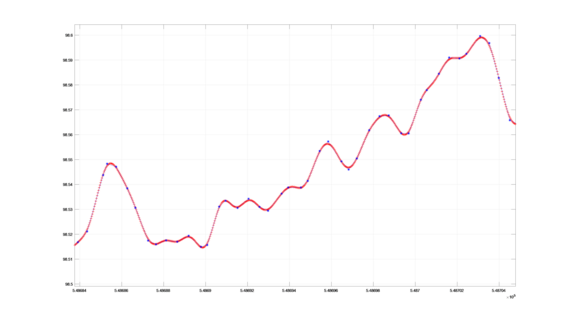

- Trajectory Interpolation Model: We proposed a distance-based trajectory model to reduce the number of direct trajectory observations. Anchor points are defined according to the traveled distance, and the original trajectory data are introduced as observations to estimate the six exterior orientation elements (three position coordinates and three rotation angles) of these anchor points. Several strategies will be used to refine the result. We call this the direct trajectory model (see Figure 3).

- Image Orientation (Perspective Model): Assuming that the trajectory between neighboring anchor points is linear, the exterior orientation of the images can be derived from the anchor points by interpolation. This yields good initial values, which can greatly simplify the SfM processing. We call this the perspective model.

- Combined Adjustment: Instead of the traditional SfM approach, we use a combined adjustment that integrates the direct trajectory observations and the images into a common adjustment. With this hybrid adjustment model, the poses of the anchor points and the object points can be adjusted simultaneously.
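The trajectory and perspective models described above can be illustrated as a small linear least-squares problem. This is a minimal sketch under stated assumptions, not the project's implementation. Anchors are placed at a fixed spacing of traveled distance, and each trajectory sample is modeled as a linear interpolation between its two neighboring anchors. All six elements (X, Y, Z and three rotation angles) are interpolated component-wise, which is only reasonable for small attitude changes without angle wrap-around. The function names, the 10 m spacing, the single standard deviation per observation group and the synthetic straight-line data are all hypothetical. The image measurements and object points of the actual combined adjustment are not modeled.

```python
import numpy as np


def travelled_distance(xyz):
    """Cumulative distance along the trajectory for an (n, 3) array of positions."""
    steps = np.linalg.norm(np.diff(xyz, axis=0), axis=1)
    return np.concatenate(([0.0], np.cumsum(steps)))


def define_anchors(total_distance, spacing):
    """Hypothetical anchor placement: roughly every `spacing` metres of travel.

    The spacing is adjusted slightly so that the first and last anchors
    coincide with the start and end of the trajectory.
    """
    n_intervals = max(1, int(round(total_distance / spacing)))
    return np.linspace(0.0, total_distance, n_intervals + 1)


def interpolation_matrix(s, anchors):
    """Linear interpolation weights between the two anchors bracketing each s.

    Row i encodes pose(s_i) = (1 - t) * pose_k + t * pose_{k+1}.
    """
    s = np.asarray(s, dtype=float)
    k = np.clip(np.searchsorted(anchors, s, side="right") - 1, 0, len(anchors) - 2)
    t = (s - anchors[k]) / (anchors[k + 1] - anchors[k])
    A = np.zeros((len(s), len(anchors)))
    A[np.arange(len(s)), k] = 1.0 - t
    A[np.arange(len(s)), k + 1] = t
    return A


def estimate_anchor_poses(anchors, groups):
    """Weighted least-squares estimate of the six pose elements at each anchor.

    `groups` is a list of (s, poses, sigma) tuples: distances along the
    trajectory, an (m, 6) array of observed poses, and one standard deviation
    shared by all six elements of that group (a simplifying assumption).
    """
    rows, rhs = [], []
    for s, poses, sigma in groups:
        rows.append(interpolation_matrix(s, anchors) / sigma)
        rhs.append(np.asarray(poses, dtype=float) / sigma)
    X, *_ = np.linalg.lstsq(np.vstack(rows), np.vstack(rhs), rcond=None)
    return X  # shape (n_anchors, 6)


def interpolate_poses(s, anchors, anchor_poses):
    """Poses at arbitrary distances s, e.g. initial image exterior orientations."""
    return interpolation_matrix(s, anchors) @ anchor_poses


if __name__ == "__main__":
    # Synthetic, noise-free straight drive: X = s, Y = 2, Z = 0,
    # angles (omega, phi, kappa) in radians, with kappa constant at 0.5.
    s_true = np.linspace(0.0, 100.0, 201)
    zeros = np.zeros_like(s_true)
    poses = np.column_stack([s_true, np.full_like(s_true, 2.0), zeros,
                             zeros, zeros, np.full_like(s_true, 0.5)])
    s_obs = travelled_distance(poses[:, :3])
    anchors = define_anchors(s_obs[-1], spacing=10.0)
    anchor_poses = estimate_anchor_poses(anchors, [(s_obs, poses, 0.05)])
    image_s = np.array([12.5, 47.0, 88.8])  # hypothetical image positions along track
    print(len(anchors), "anchors")
    print(interpolate_poses(image_s, anchors, anchor_poses))
```

Because `estimate_anchor_poses` stacks weighted observation groups that share the same anchor unknowns, further observation types could in principle be appended to `groups`. A real combined adjustment would instead add collinearity equations for the image measurements, with the object points as additional unknowns, which makes the problem non-linear.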