SILKNOW

| Team: | D. Clermont, D. Wittich, M. Dorozynski, F. Rottensteiner |

| Year: | 2018 |

| Funding: | EU (Horizon 2020 Program) |

| Duration: | 2018 - 2021 |

| Is Finished: | yes |

| Further information: | silknow.eu |

Project description

SILKNOW (“Silk heritage in the Knowledge Society: from punched cards to big data, deep learning and visual/tangible simulations”) is a project that has received funding from the European Union’s Horizon 2020 research and innovation program under grant agreement No. 769504. The goal of the project is to improve the understanding, conservation and dissemination of European silk heritage from the 15th to the 19th century. It applies next-generation computing research to the needs of diverse users (museums, education, tourism, creative industries, media, etc.) and preserves the tangible and intangible heritage associated with silk.

Based on records from existing catalogues, the project aims to produce a digital model of weaving techniques (a “Virtual Loom”) by means of automatic visual recognition, advanced spatio-temporal visualization, and multilingual, semantically enriched access to digital data. Its research activities and outputs have a direct impact on computer science and big data management, with a focus on searching digital content across heterogeneous, multilingual and multimodal databases.

IPI leads Work Package (WP) 4 of the SILKNOW project, which deals with the application of deep learning to image analysis. Our research in this project focuses on two main topics:

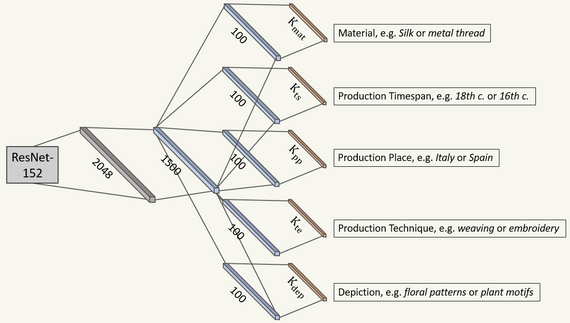

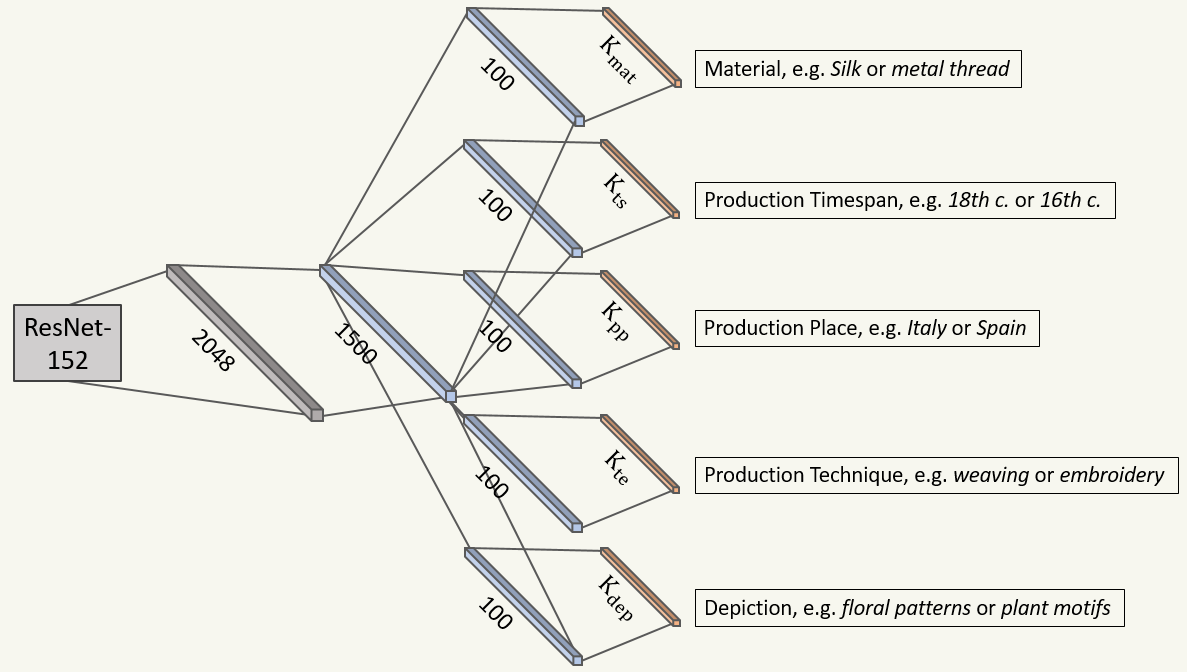

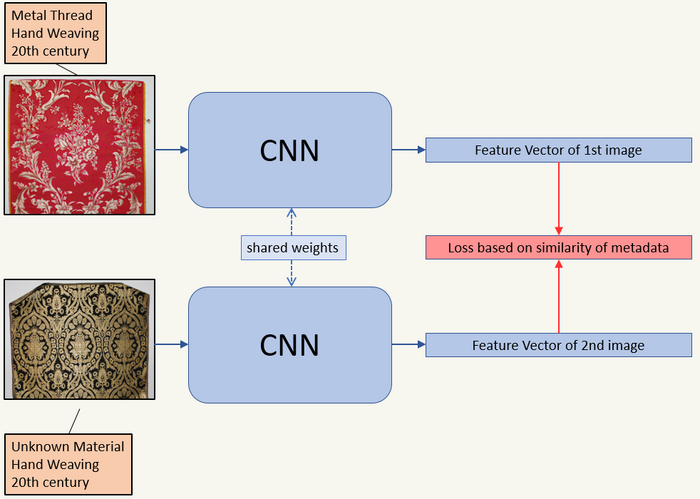

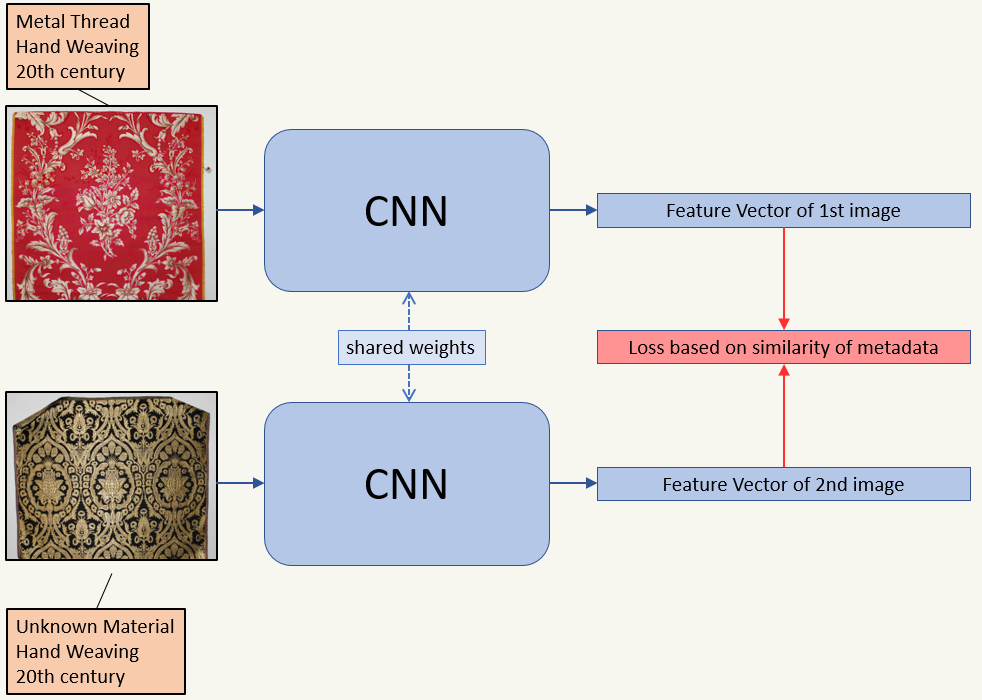

- Classification of images of silk fabrics using convolutional neural networks (CNNs): The goal of the classification is to automatically predict certain properties of silk fabrics, such as the production place, timespan or technique (cf. Fig. 1). To this end, we train CNNs using multi-task learning, which also exploits the inherent dependencies between the variables to be predicted (cf. Fig. 2). One major challenge is the automatic generation of training samples from collections in which some properties of the samples are not annotated. Using this methodology, the missing information can be predicted automatically for the incomplete samples, which provides additional information about these samples that can be included as metadata in digital collections.
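A minimal sketch of one common way to train with incomplete samples in multi-task learning is shown below: each property gets its own classification head, and the cross-entropy of a task is simply skipped for samples whose label for that task is missing. This is an illustration under stated assumptions, not the implementation from the publications listed below. The task names, the use of `None` for a missing annotation, and the helpers `multitask_loss` and `fill_missing` are hypothetical; the CNN itself is omitted, so the functions operate on the per-task class scores (logits) its output layers would produce, and the dependencies between the variables (cf. Fig. 2) are not modelled explicitly here.

```python
import math
from typing import Dict, List, Optional, Sequence

# Hypothetical task names: one classification head per property of a fabric.
TASKS = ("place", "timespan", "technique")

Scores = Dict[str, Sequence[float]]  # task -> class scores (logits) of one sample
Labels = Dict[str, Optional[int]]    # task -> class index, None if not annotated


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over the class scores of one task."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def multitask_loss(logits: List[Scores], labels: List[Labels]) -> float:
    """Sum over tasks of the mean cross-entropy, skipping missing labels.

    A sample without an annotation for a task contributes nothing to that
    task's term, but still contributes to the terms of its annotated tasks.
    """
    total = 0.0
    for task in TASKS:
        losses = [
            -math.log(softmax(scores[task])[truth[task]])
            for scores, truth in zip(logits, labels)
            if truth.get(task) is not None
        ]
        if losses:  # no annotated sample for this task in the batch: skip it
            total += sum(losses) / len(losses)
    return total


def fill_missing(logits: List[Scores], labels: List[Labels]) -> List[Labels]:
    """Complete each sample's labels with the arg-max class of the matching head."""
    completed = []
    for scores, truth in zip(logits, labels):
        filled = dict(truth)
        for task in TASKS:
            if filled.get(task) is None:
                task_scores = scores[task]
                filled[task] = max(range(len(task_scores)), key=task_scores.__getitem__)
        completed.append(filled)
    return completed
```

For example, a sample whose timespan is not annotated only contributes to the place and technique terms of the loss during training; afterwards, `fill_missing` supplies the most likely timespan class for it, which could then be stored as additional metadata.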

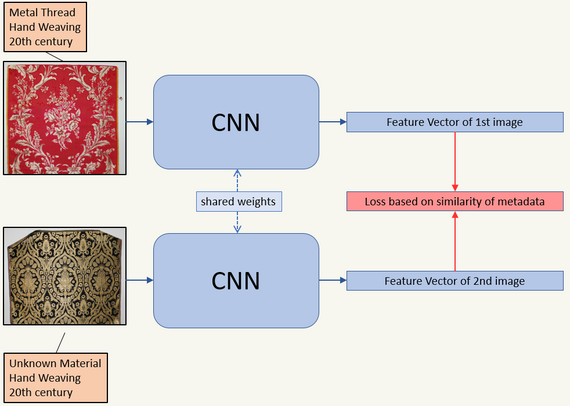

- Image retrieval based on a Siamese CNN: The goal is to find images in a database that are similar to a given query image. To this end, we want to implement a CNN that produces a characteristic feature vector for each image, which can be used to index the images in a database. This index is then used to retrieve images from the database. After training a Siamese CNN, the CNN is applied to all available images to produce such feature vectors, and a spatial index is built on the basis of these vectors. When a new image is presented to the retrieval software, the CNN extracts its feature vector, which is then used to find the N nearest feature vectors and, consequently, the N most similar images in the database. In this way, a user can find information about a new sample by resorting to the meta-information about similar samples in the database. The main challenge is the definition of the training samples. In Siamese CNN training, a sample consists of a pair of images and the information whether they are similar or not. Our notion of similarity builds on the similarity of metadata because this information can be extracted automatically from existing collections (cf. Fig. 3).
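The sketch below illustrates, under simplifying assumptions, the ingredients of the retrieval approach described above: labelling image pairs as similar or dissimilar from their metadata, a standard contrastive loss for one Siamese pair, and the search for the N nearest neighbours of a query feature vector. The similarity rule (agreement on at least one property annotated for both images), the margin value and all function names are hypothetical and not taken from the project's software. The CNN that produces the feature vectors is omitted, and a linear scan stands in for the spatial index; a structure such as a k-d tree could replace it.

```python
import heapq
import itertools
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]
Metadata = Dict[str, str]  # property -> value; unknown properties are left out


def is_similar(meta_a: Metadata, meta_b: Metadata, min_shared: int = 1) -> bool:
    """Hypothetical rule: two images are 'similar' if they agree on at least
    `min_shared` properties that are annotated for both of them."""
    shared = sum(1 for key in meta_a.keys() & meta_b.keys() if meta_a[key] == meta_b[key])
    return shared >= min_shared


def make_pairs(records: List[Tuple[str, Metadata]]) -> List[Tuple[str, str, int]]:
    """Build Siamese training samples: (image_a, image_b, 1 if similar else 0)."""
    return [
        (id_a, id_b, int(is_similar(meta_a, meta_b)))
        for (id_a, meta_a), (id_b, meta_b) in itertools.combinations(records, 2)
    ]


def euclidean(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(f_a: Vector, f_b: Vector, similar: bool, margin: float = 1.0) -> float:
    """Contrastive loss for the feature vectors produced by the two
    weight-sharing branches: similar pairs are pulled together, dissimilar
    pairs are pushed apart until their distance reaches `margin`."""
    d = euclidean(f_a, f_b)
    if similar:
        return 0.5 * d ** 2
    return 0.5 * max(0.0, margin - d) ** 2


def retrieve(query: Vector, index: List[Tuple[str, Vector]], n: int) -> List[str]:
    """Return the ids of the n database images whose feature vectors are closest
    to the query. This linear scan stands in for a spatial index (e.g. a k-d tree)."""
    nearest = heapq.nsmallest(n, index, key=lambda item: euclidean(query, item[1]))
    return [image_id for image_id, _ in nearest]
```

For example, with `index = [("a", [0.0, 0.0]), ("b", [1.0, 0.0]), ("c", [5.0, 5.0])]`, the call `retrieve([0.9, 0.1], index, 2)` returns `["b", "a"]`; the metadata of these two images would then be presented to the user. The contrastive loss only penalizes dissimilar pairs that are closer than the margin, so the network is not forced to push unrelated images arbitrarily far apart.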

Publications

Dorozynski, M.; Clermont, D.; Rottensteiner, F. (2019): Multi-task deep learning with incomplete training samples for the image-based prediction of variables describing silk fabrics. In: ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences IV-2/W6, pp. 47–54. DOI: 10.5194/isprs-annals-IV-2-W6-47-2019

Dorozynski, M.; Wittich, D.; Rottensteiner, F. (2019): Deep Learning zur Analyse von Bildern von Seidenstoffen für Anwendungen im Kontext der Bewahrung des kulturellen Erbes [Deep learning for the analysis of images of silk fabrics for applications in the context of cultural heritage preservation]. In: 39. Wissenschaftlich-Technische Jahrestagung der DGPF und Dreiländertagung der OVG, DGPF und SGPF in Wien, Publikationen der DGPF, Vol. 28, pp. 387–399.

Alba Pagán, E.; Gaitán Salvatella, M.; Pitarch, M. D.; León Muñoz, A.; Moya Toledo, M.; Marin Ruiz, J.; Vitella, M.; Lo Cicero, G.; Rottensteiner, F.; Clermont, D.; Dorozynski, M.; Wittich, D.; Vernus, P.; Puren, M. (2020): From silk to digital technologies: A gateway to new opportunities for creative industries, traditional crafts and designers. The SILKNOW case. In: Sustainability 12(19), paper 8279. DOI: 10.3390/su12198279

Schleider, T.; Troncy, R.; Ehrhart, T.; Dorozynski, M.; Rottensteiner, F.; Sebastián Lozano, J.; Lo Cicero, G. (2021): Searching silk fabrics by images leveraging on knowledge graph and domain expert rules. In: SUMAC'21: Proceedings of the 3rd Workshop on Structuring and Understanding of Multimedia heritAge, pp. 41–49. DOI: 10.1145/3475720.3484445